Multi-Agent Robot Learning and Control

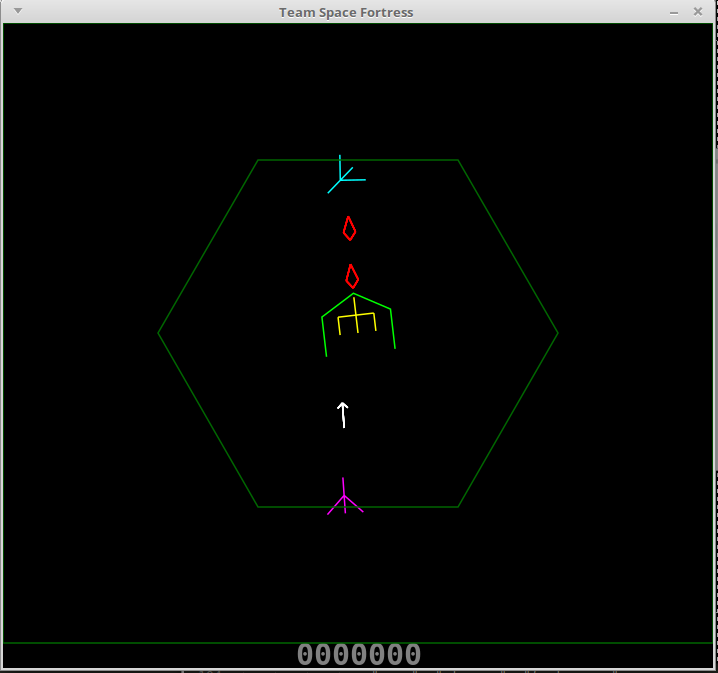

Enabling robots to collaborate with humans requires both predicting the human's behaviour and determining appropriate robot responses. Imitation learning, or learning from demonstrations of human-human collaboration, is a sample-efficient way to achieve this. Prior work on human-robot collaboration using imitation learning mostly assumes that human behaviour in a particular situation can be narrowed down to a single expert policy. Research that does deal with varying human behaviour, on the other hand, does not address scenarios where humans and robots collaborate without direct communication. We are working on a novel framework to train an agent that learns to make intelligent observations and take sequential decisions in order to collaborate effectively with a human towards a shared goal, while taking into account the latent factors present in expert demonstrations.

Advisors: Dr. Katia Sycara, Dr. Dana Hughes

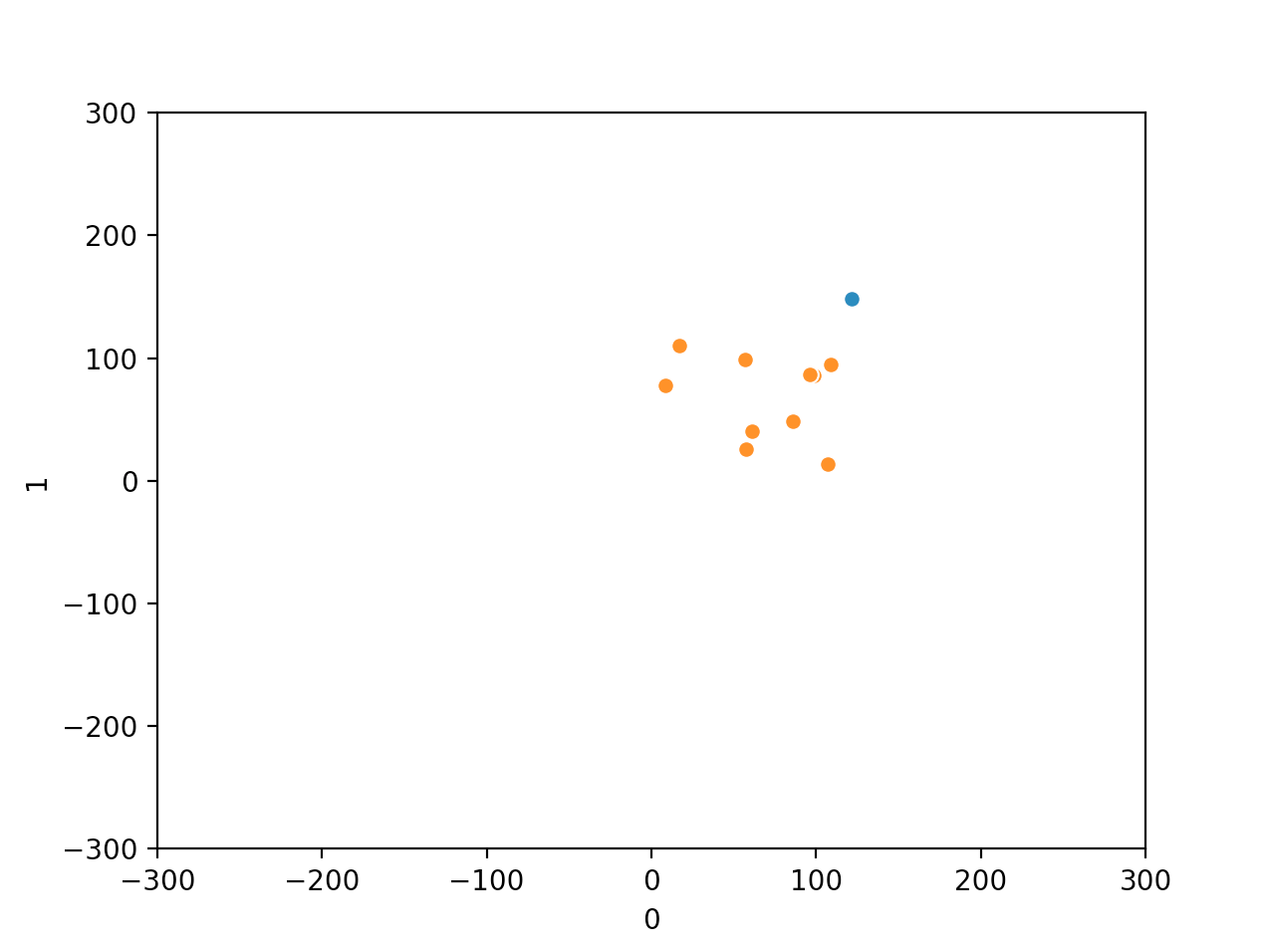

Swarms are decentralized systems in which multiple agents follow common control laws and behave as a collective entity. In nature, swarm behaviour can be observed in various animals, insects, and fish, for purposes such as foraging or navigation. We consider Strombom's shepherding model, proposed in 2014, which models the collecting and herding actions of a shepherd (predator) driving sheep (agents) towards a destination. We employ reinforcement learning techniques to achieve better results than the heuristic approach proposed by Strombom et al.
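The collect-and-drive heuristic that serves as the baseline here can be sketched roughly as follows. This is a minimal illustration, not the code used in this project: the function name `shepherd_target`, the interaction radius `r_a`, and the gathering threshold and stand-off distances are assumptions based on a reading of Strombom et al., and should be checked against the paper.

```python
import math

def centroid(points):
    """Mean position of a list of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

def unit(dx, dy):
    """Unit vector in direction (dx, dy); zero vector if the input is zero."""
    norm = math.hypot(dx, dy)
    return (0.0, 0.0) if norm == 0 else (dx / norm, dy / norm)

def shepherd_target(sheep, goal, r_a=2.0):
    """Return (mode, point): the point the shepherd should move towards.

    r_a is an assumed agent-to-agent interaction radius.
    """
    n = len(sheep)
    gcm = centroid(sheep)
    # Assumed rule: the flock is "gathered" when every sheep lies within
    # r_a * n^(2/3) of the flock's centre of mass.
    threshold = r_a * n ** (2.0 / 3.0)
    farthest = max(sheep, key=lambda s: math.dist(s, gcm))
    if math.dist(farthest, gcm) <= threshold:
        # Drive: stand behind the flock, on the side opposite the goal.
        ux, uy = unit(gcm[0] - goal[0], gcm[1] - goal[1])
        offset = r_a * math.sqrt(n)
        return "drive", (gcm[0] + ux * offset, gcm[1] + uy * offset)
    # Collect: stand behind the farthest sheep, pushing it towards the centre.
    ux, uy = unit(farthest[0] - gcm[0], farthest[1] - gcm[1])
    return "collect", (farthest[0] + ux * r_a, farthest[1] + uy * r_a)

# Example: a compact flock is driven, a scattered one is collected first.
compact = [(10, 10), (11, 10), (10, 11), (11, 11)]
scattered = [(0, 0), (1, 0), (0, 1), (50, 0)]
print(shepherd_target(compact, goal=(0, 0)))
print(shepherd_target(scattered, goal=(100, 100)))
```

A reinforcement learning agent would replace `shepherd_target` with a learned policy that maps the same observations (sheep positions and goal) to shepherd actions.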

Work Referred: Solving the shepherding problem: heuristics for herding autonomous, interacting agents [paper]

Advisor: Dr. P. B. Sujit

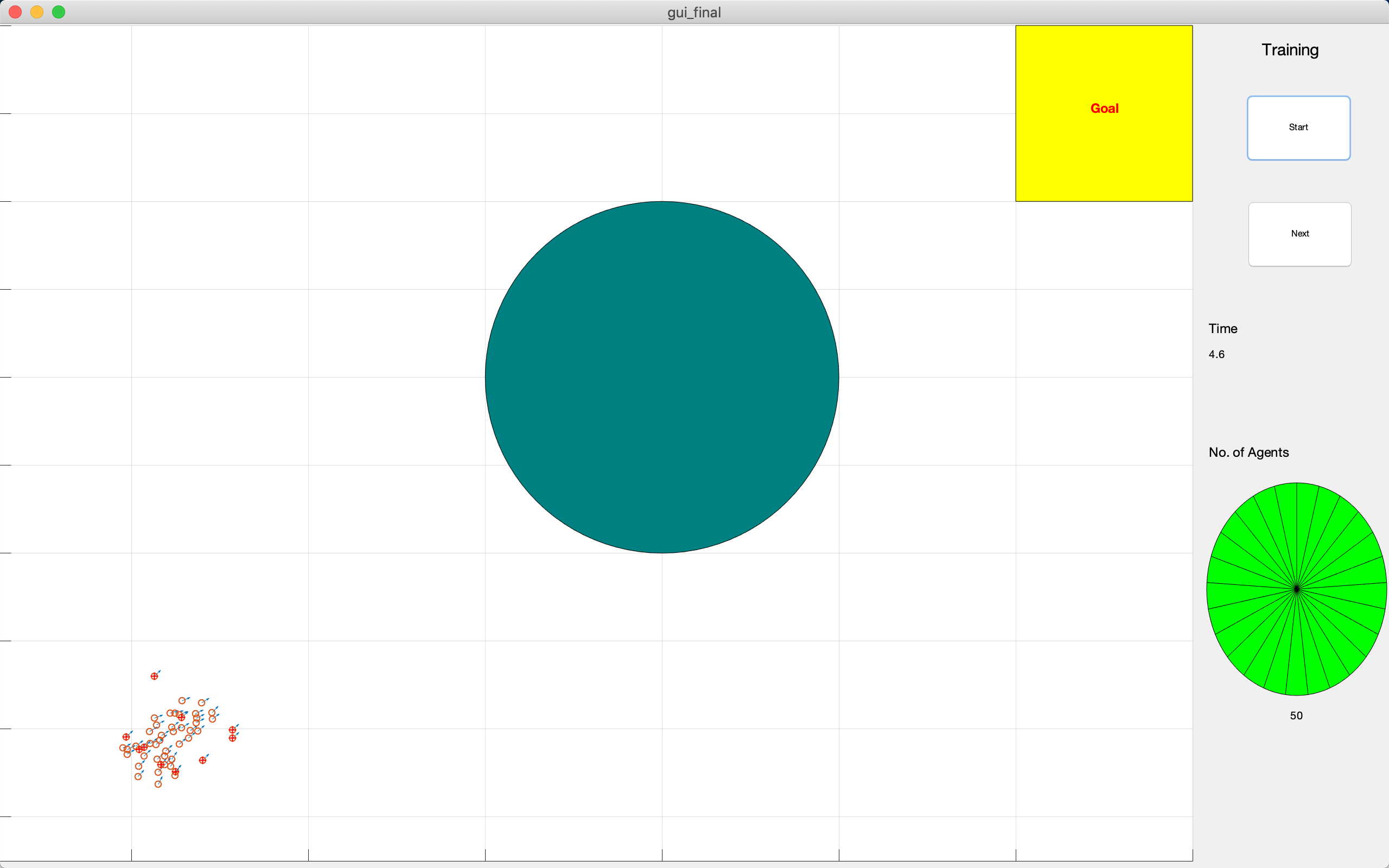

Human control of a robotic swarm entails selecting a few influential leaders who can steer the collective efficiently and robustly. We are conducting experiments that vary the number of leaders and their positions in simulated robotic swarms of different sizes, and assessing the effect on steering effectiveness and energy expenditure. Specifically, we are analyzing how placing leaders in the front, middle, or periphery affects the time to converge and the lateral acceleration of a swarm of robotic agents as it performs a single turn to reach the desired goal direction. The basic results are published in the paper mentioned below. We are conducting follow-up experiments before submitting the work to a journal.
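To make the "time to converge" metric concrete, the toy sketch below counts update steps until every agent's heading matches the goal direction. It is not the simulation used in the paper: agents sit at fixed positions on a line, followers simply average the headings of nearby agents, and the names `steps_to_converge`, `radius`, and `tol` are hypothetical.

```python
import math

def steps_to_converge(n_agents, leader_ids, goal=math.pi / 2,
                      radius=1, tol=0.01, max_steps=10_000):
    """Count synchronous update steps until all headings are within tol of goal.

    Leaders hold the goal heading; each follower takes the mean heading of
    the agents within `radius` index positions (itself included).
    Returns None if the swarm has not converged after max_steps.
    """
    headings = [0.0] * n_agents
    leaders = set(leader_ids)
    for i in leaders:
        headings[i] = goal
    for step in range(1, max_steps + 1):
        updated = headings[:]
        for i in range(n_agents):
            if i in leaders:
                continue  # leaders ignore their neighbours
            lo, hi = max(0, i - radius), min(n_agents - 1, i + radius)
            window = headings[lo:hi + 1]
            updated[i] = sum(window) / len(window)
        headings = updated
        if max(abs(h - goal) for h in headings) < tol:
            return step
    return None

# Compare a single leader in the middle of the line with one at the end.
print("middle leader:", steps_to_converge(11, [5]))
print("end leader:   ", steps_to_converge(11, [0]))
```

In this simplified setting a centrally placed leader reaches every follower through shorter chains of neighbours, so the swarm settles sooner; the actual experiments use a richer motion model and also measure lateral acceleration.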

Work Referred: Effect of Leader Placement on Robotic Swarm Control [paper]

Advisors: Dr. P. B. Sujit, Dr. Sachit Butail

Robotic Systems

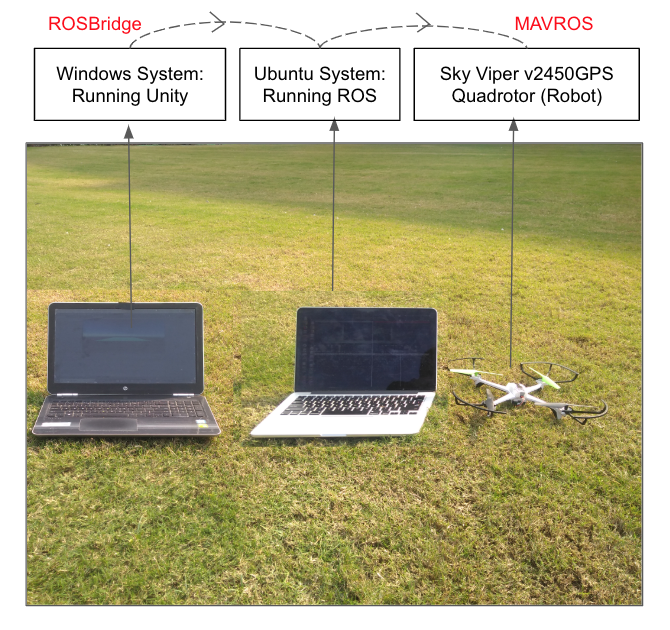

Robotic swarms lack the intelligence needed for high-level tasks. Human input is required for effective mission execution, and for applications where the physical presence of a human is not possible. Virtual reality provides an interactive environment between humans and robots that is effective and close to real-world scenarios. We used an HTC Vive headset and a Unity environment to control a shepherd model of a swarm, led by a SkyViper v2450GPS quadrotor. Due to infrastructure issues, the remainder of the swarm was simulated virtually. We were able to control the leader virtually and observe the resulting motion of the swarm. We will conduct a few more experiments before submitting the work.

Work Referred: Human-Robot Interaction: A Survey [paper]

Advisor: Dr. P. B. Sujit

The aim of this project was to employ imitation learning with a single fisheye camera, as opposed to a set of three frontal cameras (left, right and center), to improve vision in self-driving cars. Data was collected from humans controlling the ground rover, and the human steering angle was the only training signal. We built the entire system hardware ourselves, using an Nvidia Jetson TX2 module as its brain and a Point Grey Chameleon 3 camera. We had preliminary results from a previous dataset. However, due to infrastructure and resource issues, we had to temporarily discontinue the project.
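Training on the human steering angle alone amounts to supervised regression (behavioural cloning). The sketch below shows that idea in its simplest form, assuming a linear model over a small feature vector in place of a convolutional network over camera images. The names `train_steering_model` and `predict`, and the synthetic data, are hypothetical and are not taken from the project.

```python
import random

def predict(w, b, features):
    """Predicted steering angle for one feature vector."""
    return sum(wi * xi for wi, xi in zip(w, features)) + b

def train_steering_model(samples, epochs=200, lr=0.05):
    """Fit steering = w . features + b by SGD on squared error.

    samples: list of (features, human_angle) pairs, where human_angle is
    the recorded human steering command (the only training signal).
    """
    dim = len(samples[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for features, human_angle in samples:
            err = predict(w, b, features) - human_angle
            # Gradient step on 0.5 * err^2 for this sample.
            w = [wi - lr * err * xi for wi, xi in zip(w, features)]
            b -= lr * err
    return w, b

# Synthetic stand-in for recorded driving data (not real measurements).
rng = random.Random(0)
demo = []
for _ in range(50):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    demo.append((x, 0.8 * x[0] - 0.3 * x[1]))

w, b = train_steering_model(demo)
print(predict(w, b, (0.5, -0.5)))
```

With real data, the feature vector would be replaced by the fisheye image and the linear model by a network, but the loss and training signal stay the same.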

Work Referred: End to End Learning for Self-Driving Cars [paper]

Advisor: Dr. Chetan Arora

Human-Technology Interaction

Publication: Building Sociality through Sharing: Seniors' Perspectives on Misinformation

Advisor: Dr. Shriram Venkatraman

Publication: Digital Wallets ‘Turning a Corner’ for Financial Inclusion: A Study of Everyday PayTM Practices in India

Advisor: Dr. Nimmi Rangaswamy